(Discovery)

In The Hitchhiker's Guide to the Galaxy, interstellar travellers get around the language barrier by sticking a Babel Fish in their ears. The listener hears, in the speaker's own voice, a translation of whatever is said.

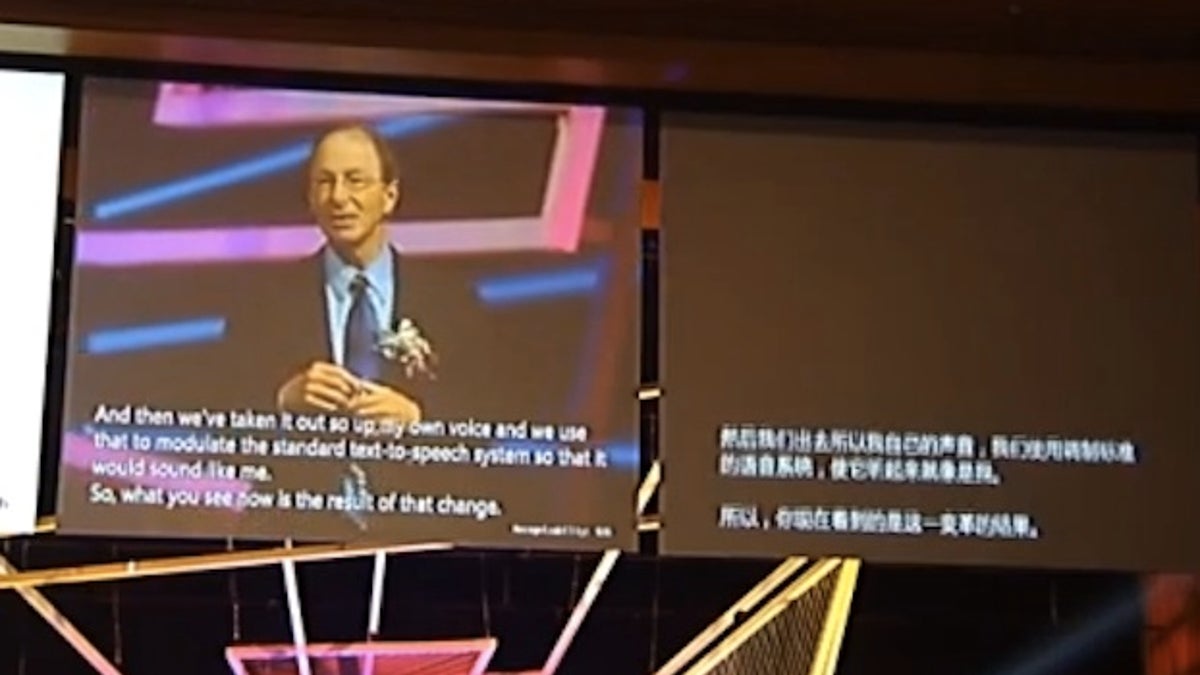

Microsoft is attempting to build a language translator that does just that. Rick Rashid, Microsoft's chief research officer, demonstrated the system at a presentation in Tianjin, China, on Oct. 25. He said it uses a technique called "deep neural networks," which is a way of connecting computers so that they work more like a human brain. When the machine repeated one of Rashid's phrases in Chinese, in his own voice, the crowd roared with applause.

Most language translation systems incorporate two parts: speech recognition and statistical models to get the right meaning for the words. That's how Google Translate works, for example. But getting a machine to understand a person having a conversation is a tougher problem. That's one reason having a translator that speaks like a real person helps -- at least if the sound and rhythm are there, it's easier to parse out meaning when mistakes happen.

Rashid said the neural network system improves the way the machine learns, supplementing the statistical models. It reduces the error rate, which is typically around 20 to 25 percent, to 15 percent.

But it's not flawless, as Rashid's talk revealed. At one point, the text on the screen behind him said "about one air out of every four or five words," when the word he wanted was "error." About a minute and a half later, the text said, "Take the text that comes from my voice, input dot iii translation system. It really happen to stop." (What Rashid actually said was: "It takes the text that comes from my voice and puts it into the translation system. It really happens in two steps.")

These are not big problems in themselves. The audience in Tianjin could, of course, hear Rashid speak and see the English text at the same time. Absent that, those parts of his speech likely wouldn't make sense in Chinese. English speakers all know that "error" and "air" sound similar and can correct for that in their heads. A computer can't, at least not yet. Still, the accuracy was good enough that anyone who could not read or understand English could have followed along, even with the glitches.

Microsoft is building on technologies that it has already introduced into its phones, and the ability to translate without being connected to the Internet is arguably more useful than hearing a translation into another language in one's own voice. NTT DoCoMo also has a real-time translator, and Google has a translation app that works in speech-to-speech mode with a smartphone.

The technology shows that machine translation has come a long way. And even with their problems, the translators are considerably less icky than sticking a Babel Fish in your ear.