A House Democrat proposed legislation this week that would require political campaign ads to make it clear to viewers when generative artificial intelligence is used to produce video or images in those ads. The proposal is a response to an AI-generated ad against President Biden that was released last week.

Rep. Yvette Clarke, D-N.Y., said in a statement introducing her bill that AI has become a factor in the upcoming campaign and needs to be regulated so people can understand what they hear and see on television.

"The upcoming 2024 election cycle will be the first time in U.S. history where AI generated content will be used in political ads by campaigns, parties, and Super PACs," she said. "Unfortunately, our current laws have not kept pace with the rapid development of artificial intelligence technologies."

Rep. Yvette Clarke, D-N.Y., proposed legislation to regulate campaign ads by making them reveal whether AI was used to produce graphics or videos. (Photo by Jabin Botsford/The Washington Post via Getty Images)

"If AI-generated content can manipulate and deceive people on a large scale, it can have devastating consequences for our national security and election security," she added.

An aide to Clarke acknowledged that the bill was prompted by the release of the Republican National Committee's AI-generated ad called "Beat Biden," which depicted a dystopian near-future in which President Biden wins re-election in 2024. The ad shows AI-produced images and videos of Taiwan being overrun by Chinese forces, economic collapse in the U.S., a surge in illegal immigrants at the southern border and the shuttering of the entire city of San Francisco due to rampant crime.

The aide said it is irrelevant that the ad came from the GOP side; what matters is that it shows AI is now entering the political world and will soon be used by both major parties. "It showed us the top dogs are ready," he told Fox News Digital.

After the GOP ad was released, the Democratic National Committee replied by calling it "remarkably bad" and saying that Republicans had to "make up" images about a future Biden presidency because they "can’t argue with President Biden’s results."

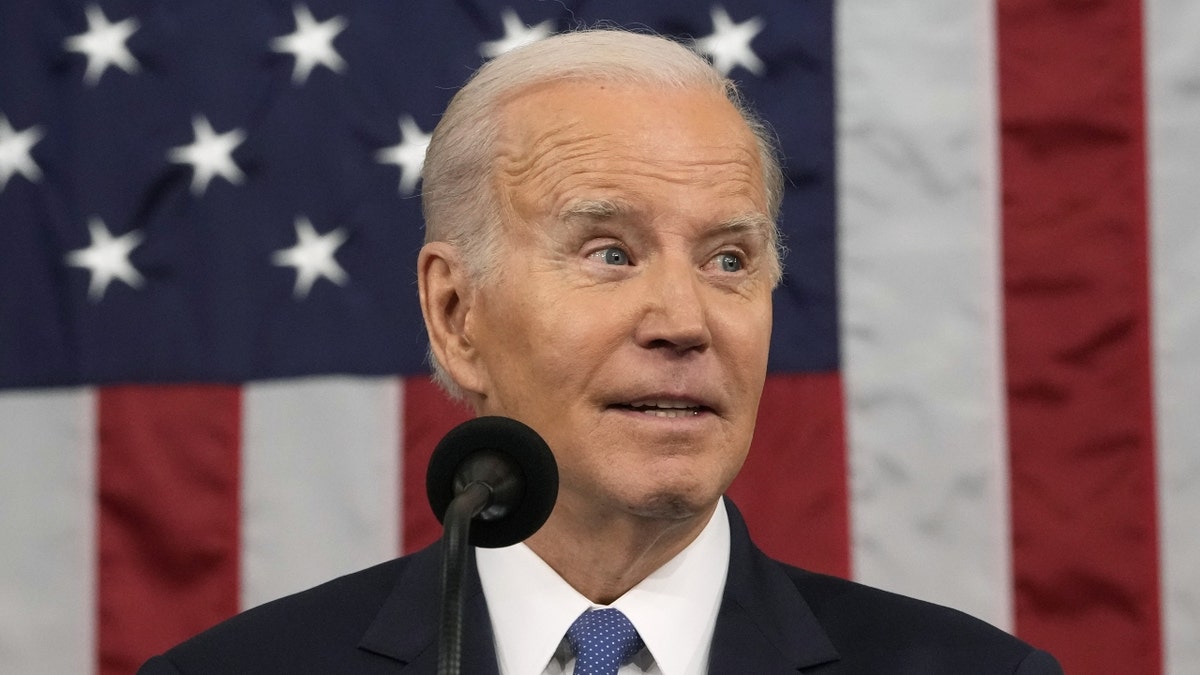

The Republican National Committee ran an ad against President Biden using AI that depicted a dystopian future after Biden's 2024 re-election. (Photographer: Jacquelyn Martin/AP/Bloomberg via Getty Images)

Clarke’s legislation would amend the law governing the Federal Election Commission to require TV and online ads to disclose when generative AI is used to create video or images. The bill warns that AI-generated ads have the potential for "spreading misinformation and disinformation at scale and with unprecedented speed."

Her bill would require the FEC to issue regulations that spell out when an AI-generated image or video would need to be flagged. The House aide explained that the intent is to give the FEC flexibility in setting the rules. Those rules might focus only on informing viewers when AI was involved in producing major or prominent elements of an ad, and allow exemptions for minor or less prominent AI features.

The bill says any covered election communication that includes AI-generated video or images must also include "a statement that the communication contains such an image or footage," and that statement must be presented in a "clear and conspicuous manner."

Under the bill, failure to make a required disclosure of the use of AI would carry the same penalty as the failure to disclose who paid for a campaign ad. Clarke is proposing that these changes take effect on January 1, 2024.

The Democratic National Committee criticized the RNC, run by Chairwoman Ronna McDaniel, for inventing stories about Biden in its anti-Biden ad. (David McNew/Getty Images)

Clarke's bill is one of several proposals by Congress to start getting a handle on AI and how to regulate it. Leaders in both the House and Senate are calling in experts to discuss the implications of this fast-evolving and widely dispersed technology, and several Biden administration agencies are beginning to tackle ways to regulate AI using their existing regulatory authorities.