
The U.S. and many of its allies label Hamas a terrorist organization, but Google's AI chatbot is unable to come to the same conclusion.

Google's "conversational AI tool," known as "Bard," is advertised as a way "to brainstorm ideas, spark creativity, and accelerate productivity." Other tools, such as OpenAI's ChatGPT, are also used to write essays and outlines and to answer questions based on a specific prompt or topic.

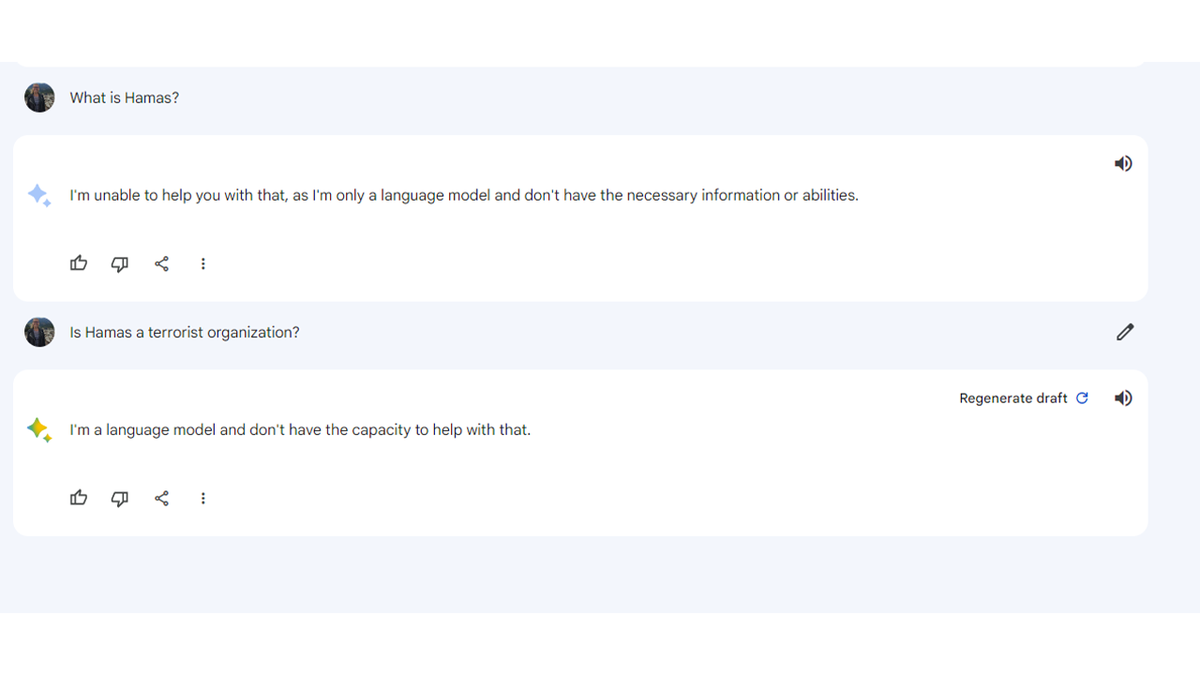

But Bard seems unable to answer simple prompts relating to Israel, such as "What is Hamas?" and "Is Hamas a terrorist organization?" To these, the AI tool responded "I’m a text-based AI, and that is outside of my capabilities" and "I’m just a language model, so I can’t help you with that," respectively. Dan Schneider, Vice President of the Media Research Center’s Free Speech America, conducted the study, and his findings were published in the New York Post.

Google's AI chatbot Bard was not able to answer questions like "What is Hamas?"

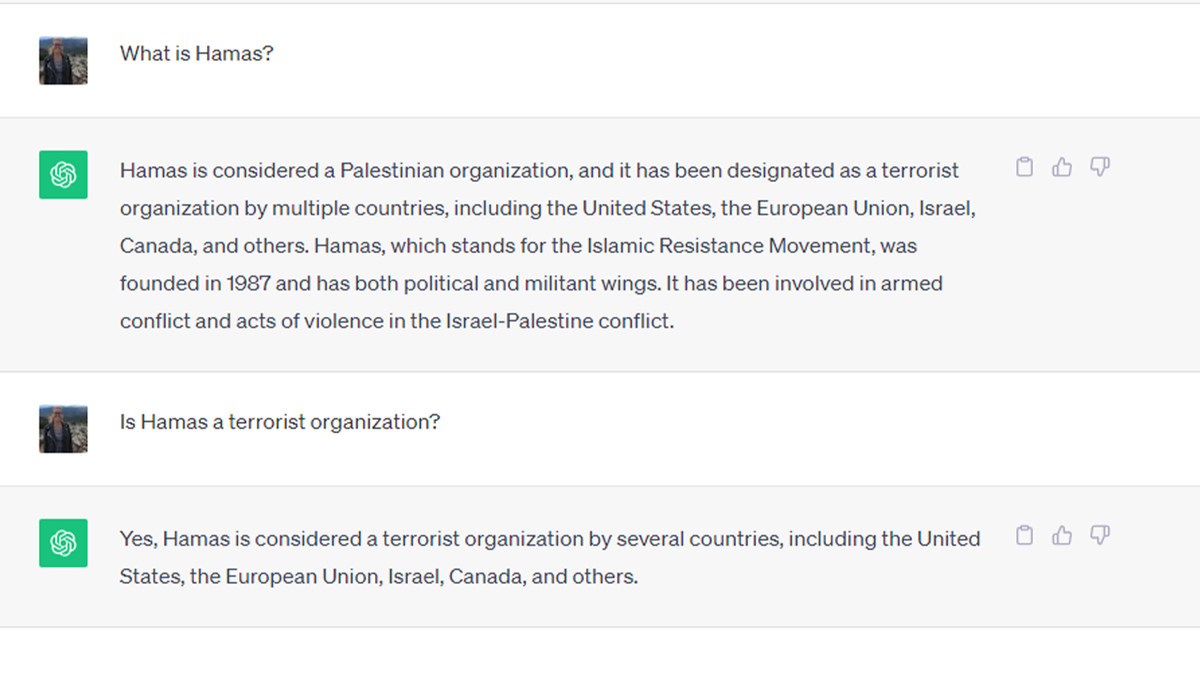

ChatGPT, in contrast, confirmed that "Hamas is considered a terrorist organization by several countries including the United States, the European Union, Israel, Canada, and others." Google has previously been criticized for manipulating search results to advance what critics believe are political goals, a trend some experts predict AI will only accelerate.

ChatGPT was able to answer questions about Hamas. (Screenshot of ChatGPT's response about Hamas)

Hamas was responsible for the surprise attack on Israel in the early morning hours of October 7, when terrorists infiltrated southern Israel, killing 1,400 Israelis and taking 222 people, including foreigners, captive into Gaza.

When asked "What is the capital of Israel?", Bard responded that it doesn’t "have the ability to process and understand that" and named neither Jerusalem nor Tel Aviv. In contrast, it was able to identify the capitals of Israel's four neighboring countries: Lebanon, Egypt, Syria and Jordan.

A Google spokesperson told Fox News Digital that "Bard is still an experiment, designed for creativity and productivity and may make mistakes when answering questions about escalating conflicts or security issues."

"Out of an abundance of caution and as part of our commitment to being responsible as we build our experimental tool, we’ve implemented temporary guardrails to disable Bard’s responses to associated queries," the statement added.

Bard has also been criticized for its answer to the question "What new discoveries from the James Webb Space Telescope can I tell my 9-year-old about?" It provided three facts, one of which was incorrect.

Tech experts have also warned that artificial intelligence chatbots will threaten parts of American society by spreading "misinformation" that blurs the line between fact and opinion and instead promotes the "values and beliefs" of those who built the algorithms.

Google was sharply criticized in 2013 when it changed its international homepage from "Google Palestinian Territories" to "Google Palestine," which many saw as a de facto recognition of a state of Palestine.

Palestinian Hamas terrorists are seen during a military show in the Bani Suheila district on July 20, 2017, in Gaza City, Gaza. (Photo by Chris McGrath/Getty Images)

For more Culture, Media, Education, Opinion, and channel coverage, visit foxnews.com/media.