Will Facebook, YouTube and Twitter police their content?

Kurt the 'CyberGuy' comments on new services and apps that do.

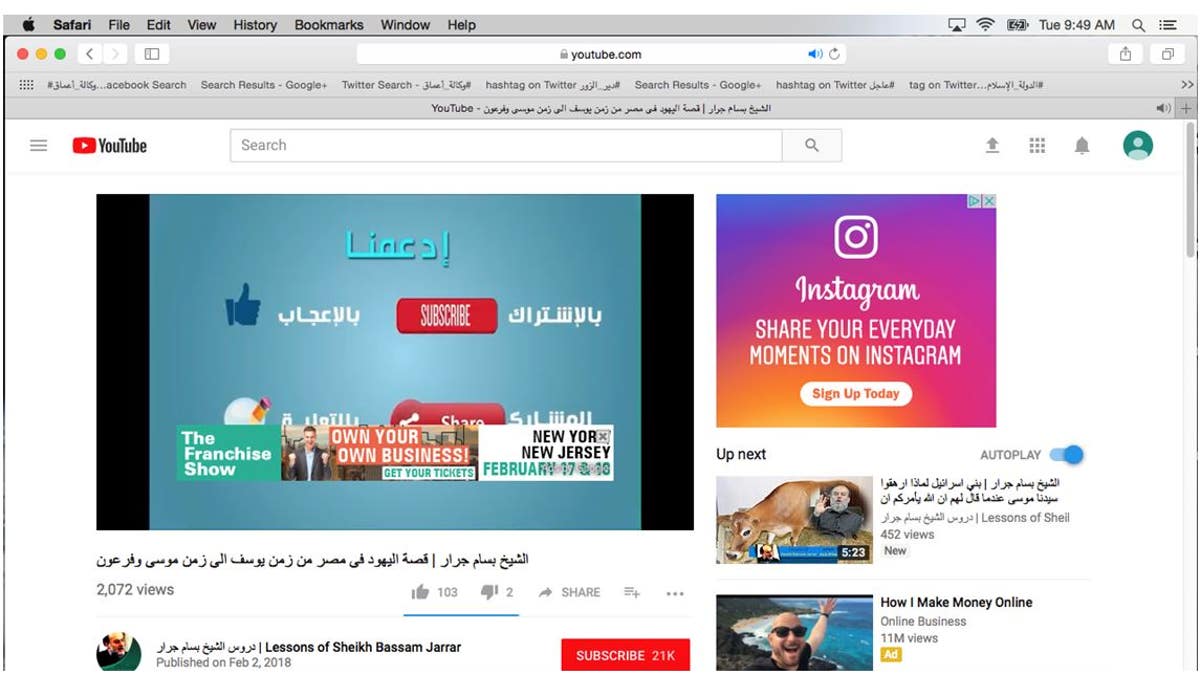

YouTube, which has suffered a rash of recent embarrassments over content that is violent, hurtful to children or part of a "fake news" scam, can't even seem to protect a fellow tech company advertising on its platform.

A recent screenshot from the Google-owned platform shows an advertisement for Instagram appearing alongside a video from a Muslim cleric who was expelled from Israel in 1993 because of his suspected membership in Hamas.

The video is called “Lessons of Sheikh Bassam Jarrar” and the Instagram ad appears along the right-hand side of the video. Hamas is regarded as a terrorist organization by the U.S., Israel and the European Union.

YouTube, which has faced intense scrutiny from Capitol Hill lawmakers, now claims its machine learning technology enables it to take down “nearly 70 percent of violent extremist content within 8 hours of upload.”

Still, the fact that YouTube can't protect even another tech firm from exposure to extremist content is a bad sign, according to experts.

YouTube's systems allow Instagram ads to appear next to extreme content. (YouTube/Courtesy Eric Feinberg)


"YouTube cannot protect a Facebook company, Instagram, from appearing on extremist content," said Eric Feinberg, a founding partner of deep web analysis company GIPEC. "If Google cannot protect Facebook, how can any brand expect to be protected from their ad appearing with extremist content?"

The video-sharing platform is also under the gun overseas. A draft European Commission document published online Tuesday calls for companies to remove posts promoting terrorism within one hour of receiving complaints.

Platforms should make “swift decisions as regards possible actions with respect to illegal content online without being required to do so on the basis of a court order or administrative decision,” the draft said.

Separate from the issue of terrorist content, YouTube is also part of a European Commission-led agreement to take down posts containing hate speech within 24 hours of being notified.


The tech giant has cracked down on what it calls "borderline videos," content that espouses hateful or supremacist views but does not technically violate the site's community guidelines against direct calls to violence. These videos will now be harder to find, won't be recommended or monetized and won't have features like comments, suggested videos and likes.

More than 400 hours of content is uploaded every minute to the platform, which has more than 1.5 billion logged-in users, according to YouTube CEO Susan Wojcicki.

In addition to its machine learning systems, YouTube is using human experts to help flag problematic videos. It has added 15 non-governmental organizations, including the Anti-Defamation League, the No Hate Speech Movement and the Institute for Strategic Dialogue.

YouTube star Logan Paul prompted a backlash and was temporarily banned from the site after posting a video, filmed in Japan, showing the body of an apparent suicide victim. More recently, he came under fire for uploading a video in which he used a stun gun on rats. In addition, the company recently banned the viral Tide Pod challenge videos after a slew of complaints and instances of young people ingesting the laundry detergent.

Fox News’ Chris Ciaccia contributed to this report.